ROBOTS.TXT

Robots.txt, also known as the robots exclusion protocol, tells search engine bots which areas of your site they should not crawl.

In this article, I will show you the basics of URL blocking in robots.txt.

What is a Robots.txt File?

Robots.txt is a text file created by webmasters to tell bots how to crawl a website's pages and whether a given file should be accessed.

You can block URLs in robots.txt to keep Google from crawling private images, expired specials, or other pages that are not ready for users to access. Used this way, blocking a URL can help your SEO efforts.

When Should I Use a Robots.txt File?

You'll want to use one if you don't want search engines to crawl certain pages or content. If you want search engines (like Google, Bing, and Yahoo) to access and index your entire site, you don't need a robots.txt file. However, it is worth noting that people sometimes use it to point crawlers to the sitemap.

Keep in mind that if other sites link to pages you have blocked, search engines can still index those URLs, and they can still appear in search results. To prevent this from happening, use the X-Robots-Tag header, a noindex meta tag, or a canonical link to the relevant page.

Common reasons to use a robots.txt file include:

- Keep parts of the site private: think of admin pages or sandboxes for your development team.

- Prevent duplicate content from appearing in search results.

- Avoid indexing problems.

- Block a URL.

- Prevent search engines from indexing certain files, such as images or PDFs.

- Control crawl traffic and prevent ad files from appearing in SERPs.

- Use it if you're running ads or paid links that require special instructions for bots.

However, if there are no areas of your site that you need to control, you don't need a robots.txt file. Google's guidelines also state that you should not use a robots.txt file to keep web pages out of search results.

This is because if other pages link to your page with descriptive text, your page can still be indexed by way of that third-party link. Noindex directives or password-protected pages are your best bet here.
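As a rough illustration of the noindex option, here is a minimal sketch of a server that adds an `X-Robots-Tag: noindex` header to some responses. It uses Python's built-in WSGI support. The `/private/` prefix and the helper names `app` and `headers_for` are assumptions made up for this example, not part of any particular site setup.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical path prefix for pages that should stay out of search results.
NOINDEX_PREFIX = "/private/"


def app(environ, start_response):
    """Tiny WSGI app that adds an X-Robots-Tag header to selected pages."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/html; charset=utf-8")]
    if path.startswith(NOINDEX_PREFIX):
        # Crawlers that honor this header are asked not to index the response.
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    return [b"<html><body>Hello</body></html>"]


def headers_for(path):
    """Call the app once for `path` and return the response headers as a dict."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured.update(headers)

    app(environ, start_response)
    return captured
```

Note that a crawler can only see this header if it is allowed to fetch the page, so a page that relies on noindex should not also be blocked in robots.txt.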

Getting Started With Robots.txt

Before you start putting together the file, make sure that one is not already in place. To find out, just add "/robots.txt" to the end of any domain name: www.examplesite.com/robots.txt. If you have one, you will see a file with a list of instructions. Otherwise, you will see a blank page or an error such as a 404.

It's also worth noting that you may not need a robots.txt file at all. If you have a relatively simple website and you don't need to block specific pages for testing or to protect sensitive information, you can do without it.
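The same check can be scripted. The sketch below builds the robots.txt address for a site and tries to download it. The function names `robots_url` and `fetch_robots` are hypothetical, and defaulting to HTTPS when no scheme is given is an assumption of this example.

```python
import urllib.error
import urllib.request
from urllib.parse import urlsplit, urlunsplit


def robots_url(site):
    """Return the robots.txt URL for a site given as a domain or a full URL."""
    if "://" not in site:
        site = "https://" + site  # assumption: default to HTTPS
    parts = urlsplit(site)
    # robots.txt lives at the root of the host, whatever page path was given.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots(site, timeout=10):
    """Return the file's text, or None if the server answers with an HTTP error."""
    try:
        with urllib.request.urlopen(robots_url(site), timeout=timeout) as resp:
            return resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError:
        # For example a 404, meaning no robots.txt is in place.
        return None
```

Calling `fetch_robots("www.examplesite.com")` needs network access, so treat it as something to try against your own domain.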

Setting Up Your Robots.txt File

These files can be used in several ways. However, their main advantage is that marketers can allow or disallow multiple pages at once without having to manually access each page's code.

All robots.txt files will produce one of the following results:

- Full allow: all content can be crawled.

- Full disallow: no content can be crawled. This means that you are completely blocking Google's crawlers from accessing any part of your website.

- Conditional allow: the rules described in the file determine which content is open for crawling and which is blocked. If you're wondering how to disallow a URL without blocking bot access to the entire site, this is it.
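The three outcomes can be sketched with Python's standard `urllib.robotparser` module. The rule sets and the `/private/` path below are illustrative assumptions, and `can_crawl` is a helper name invented for this example.

```python
from urllib.robotparser import RobotFileParser

# Full allow: an empty Disallow blocks nothing.
FULL_ALLOW = """\
User-agent: *
Disallow:
"""

# Full disallow: "/" matches every path on the site.
FULL_DISALLOW = """\
User-agent: *
Disallow: /
"""

# Conditional allow: only the (hypothetical) /private/ folder is blocked.
CONDITIONAL = """\
User-agent: *
Disallow: /private/
"""


def can_crawl(robots_txt, url, user_agent="*"):
    """Return True if `user_agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

For instance, `can_crawl(CONDITIONAL, "/private/report.html")` is expected to be false while `can_crawl(CONDITIONAL, "/blog/")` is expected to be true.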

If you want to set up a file, the process is quite simple and involves two elements:

the "user-agent", which is the bot that the following URL block applies to, and "disallow", which is the URL you want to block. These two lines together count as a single entry in the file, which means you can have multiple entries in one file.

How to Block URLs in Robots.txt
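A minimal sketch of a file with two entries, each a user-agent line followed by a disallow line, checked with Python's standard `urllib.robotparser`. The folder names and the `is_blocked` helper are assumptions chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# Two entries: one for Googlebot only, one for every other bot.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /example-subfolder/

User-agent: *
Disallow: /admin/
"""


def is_blocked(user_agent, url, robots_txt=ROBOTS_TXT):
    """Return True if the rules stop `user_agent` from fetching `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)
```

A bot follows the entry that names it and falls back to the `*` entry only when no entry names it. That is why, in this sketch, Googlebot is kept out of `/example-subfolder/` but not out of `/admin/`.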

How to Save Your File

- Save your file by copying it into a plain-text editor such as Notepad and saving it as "robots.txt".

- Make sure that the file is saved in the top-level directory of your site, on the root domain, with a name that exactly matches "robots.txt".

- Add your file to the top-level directory of your website's code for simple crawling and indexing.

- Make sure your file follows the correct structure: User-agent → Disallow → Allow → Host → Sitemap. This lets search engines read the rules in the correct order.

- Put each URL you want to "Allow:" or "Disallow:" on its own line. If multiple URLs appear on one line, crawlers will have difficulty separating them and may run into trouble.

- Always use lowercase when naming your file, because file names are case-sensitive, and do not include special characters.

- Create separate files for different subdomains. For example, "example.com" and "blog.example.com" each need their own file with its own set of directives.

- If you must leave comments, start a new line and begin the comment with the # character. The # lets crawlers know not to treat that text as a directive.

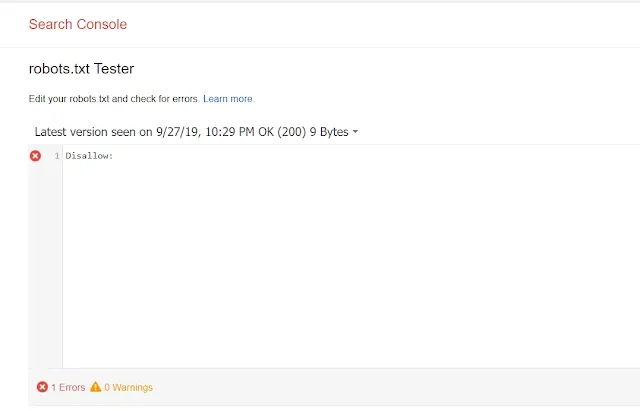
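The saving steps above can be sketched as a small generator that writes one directive per line in the User-agent, Disallow, Allow, Sitemap order and saves the result under the exact lowercase name. The function names, the `groups` dictionary layout, and the example paths are hypothetical. The Host line is left out of this sketch because not every crawler recognizes it.

```python
from pathlib import Path


def build_robots_txt(groups, sitemap=None):
    """Render rule groups as robots.txt text, one directive per line."""
    lines = ["# Comments start with # and are ignored by crawlers"]
    for group in groups:
        lines.append(f"User-agent: {group['user_agent']}")
        for path in group.get("disallow", []):
            lines.append(f"Disallow: {path}")
        for path in group.get("allow", []):
            lines.append(f"Allow: {path}")
        lines.append("")  # a blank line separates entries
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines).rstrip("\n") + "\n"


def save_robots_txt(text, site_root):
    """Write the file into the site's top-level directory as 'robots.txt'."""
    target = Path(site_root) / "robots.txt"  # lowercase, exact name
    target.write_text(text, encoding="utf-8")
    return target
```

A call such as `build_robots_txt([{"user_agent": "*", "disallow": ["/admin/"]}], sitemap="https://www.example.com/sitemap.xml")` returns the text to upload. A subdomain like blog.example.com would get its own call and its own file.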

How to Test Your Results

Test your results in your Google Search Console account to make sure bots crawl the parts of the site you want and are blocked from the URLs you don't want people to see.

- First, open the test tool and examine your file for any warnings or errors.

- Next, enter the URL of a page on your website in the box at the bottom of the page.

- Next, select the user agent you want to simulate from the drop-down list.

- Click Test.

- The button should read "Accepted" or "Blocked", indicating whether or not the URL is blocked from crawlers.

- Edit the file, if necessary, and test it again.

- Remember that changes you make in the GSC test tool will not be saved to your website (this is a simulation).

- If you want to save the changes, copy the new code to your website.
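If you want a quick local check before uploading, the test loop can be approximated with Python's standard `urllib.robotparser`. This is only a rough stand-in, not the Search Console tool: Python's parser applies the first matching rule in an entry, which may differ from how Google resolves conflicting Allow and Disallow rules. The draft rules and the `check_url` name are assumptions for this sketch.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical draft to try out before copying it to the website.
DRAFT = """\
# Draft rules under test
User-agent: Googlebot
Disallow: /expired-specials/

User-agent: *
Disallow: /admin/
"""


def check_url(robots_txt, url, user_agent):
    """Return 'Accepted' or 'Blocked' for one URL and one simulated bot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return "Accepted" if parser.can_fetch(user_agent, url) else "Blocked"
```

Editing `DRAFT` and calling `check_url` again mirrors the edit-and-retest step. As with the online tool, nothing is saved to your website until you upload the file yourself.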
